The Massachusetts Institute of Technology’s (MIT) AgeLab will build and analyze new deep-learning-based perception and motion planning technologies for automated vehicles (AV) in partnership with the Toyota Collaborative Safety Research Center (CSRC).

The new research initiative is part of Toyota’s five-year ‘CSRC Next’ program with leading academic institutions, which was launched earlier this month. AgeLab, part of the MIT Center for Transportation and Logistics, has an ongoing relationship with Toyota. Its first phase of projects with CSRC studied how drivers respond to the increasing complexity of the modern operating environment. That research eventually contributed to the redesign of the instrumentation of the current Toyota Corolla and the forthcoming 2018 Camry models.

For the next phase of the CSRC program, a team led by AgeLab research scientist Lex Fridman is working on computer vision, deep learning, and planning algorithms for semi-autonomous vehicles. Deep learning is being used to understand both the world around the car and human behavior inside it. The team has set up a stationary camera at a busy intersection on the MIT campus to automatically detect the micro-movements of pedestrians as they make decisions about crossing the street.

Using deep learning and computer vision methods, the system automatically converts the raw video footage into millisecond-level estimations of each pedestrian’s body position. The program so far has analyzed the head, arm, foot and full-body movement of more than 100,000 pedestrians. With Toyota and other partners, the team is also exploring the use of cameras positioned to monitor the driver, as well as methods to extract driver state factors from the raw video and turn them into usable data that can support future automotive industry needs.

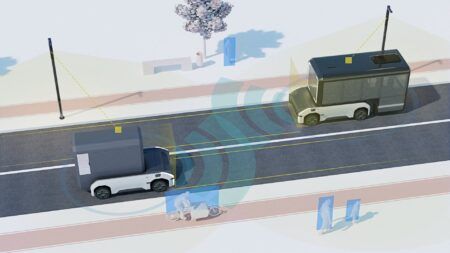

“The vehicle must first gain awareness of all entities in the driving scene, including pedestrians, cyclists, cars, traffic signals, and road markings,” explained Fridman. “We use a learning-based approach for this perception task, and also for the subsequent task of planning a safe trajectory around those entities. Just as interesting and complex is the integration of data inside the car to improve our understanding of automated systems and enhance their capability to support the driver. This includes everything about the driver’s face, head position, emotion, drowsiness, attentiveness, and body language.”

Toyota CSRC director Chuck Gulash said, “The research will contribute to better computer-based perception of a vehicle’s environment, as well as social interactions with other road users.”