With the aim of bringing more human-like reasoning to autonomous vehicles (AVs), researchers at the Massachusetts Institute of Technology (MIT) have created a system that uses only simple maps and visual data to enable driverless cars to navigate routes in new, complex environments.

Human drivers are exceptionally good at navigating roads they have not driven on before, using observation and simple tools. We simply match what we see around us to what we see on our GPS devices to determine where we are and where we need to go. Driverless cars, however, struggle with this basic reasoning. In every new area, the cars must first map and analyze all the new roads, which is very time-consuming. The systems also rely on complex maps, usually generated by 3-D scans, which are computationally intensive to generate and process on the fly.

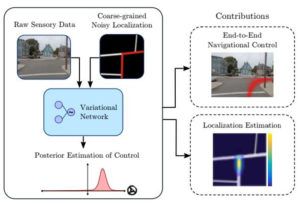

In a new paper, the MIT researchers describe an autonomous control system that ‘learns’ the steering patterns of human drivers as they navigate roads in a small area, using only data from video camera feeds and a simple GPS-like map. Then, the trained system can control a driverless car along a planned route in a brand-new area, by imitating the human driver. Similar to a human driver, the system also detects any mismatches between its map and features of the road. This helps the system determine if its position, sensors, or mapping are incorrect, in order to correct the car’s course. To train the system initially, a human operator controlled a driverless Toyota Prius equipped with several cameras and a basic GPS navigation system, collecting data from local suburban streets including various road structures and obstacles. When deployed autonomously, the system successfully navigated the car along a preplanned path in a different forested area, designated for AV tests.

To create an ‘end-to-end’ navigation and operating capability, the research team trained their system to predict a full probability distribution over all possible steering commands at any given instant while driving. The system uses a machine learning model called a convolutional neural network (CNN), commonly used for image recognition. During training, the system watches a human driver and learns how to steer. The CNN correlates steering wheel rotations with the road curvatures it observes through the cameras and an input map. Eventually, it learns the most likely steering command for various driving situations, such as straight roads, four-way or T-shaped intersections, forks, and rotaries. When driving, the system extracts visual features from the camera feed, which enables it to predict road structures. At each moment, it uses its predicted probability distribution over steering commands to choose the most likely one for following its route.
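As a rough illustration of that last step, the Python sketch below turns a set of raw scores into a probability distribution over steering commands and picks the most likely one. It is a minimal sketch under stated assumptions, not the researchers' implementation: the five discrete steering angles, the softmax conversion, the function names, and the example scores are all hypothetical stand-ins for whatever the trained network actually outputs.

```python
import math

# Hypothetical discretization of steering commands, in degrees.
# The article does not say how the real system represents them.
STEERING_BINS = [-30.0, -15.0, 0.0, 15.0, 30.0]


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def choose_steering(scores, bins=STEERING_BINS):
    """Return the most likely steering angle and the full distribution."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return bins[best], probs


# Made-up scores standing in for a network's output on one camera frame.
angle, probs = choose_steering([0.1, 0.4, 2.5, 0.3, 0.0])
print(f"chosen steering angle: {angle} degrees")
```

Keeping the whole distribution, rather than a single predicted angle, is what would let a system of this kind represent situations with more than one plausible command, such as a fork in the road.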

“Our objective is to achieve autonomous navigation that is robust for driving in new environments,” explained co-author Daniela Rus, director of MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL). “For example, if we train an autonomous vehicle to drive in an urban setting such as the streets of Cambridge, the system should also be able to drive smoothly in the woods, even if that is an environment it has never seen before.”

The paper was written by Rus, Alexander Amini and Sertac Karaman at MIT, plus Guy Rosman, from the Toyota Research Institute.