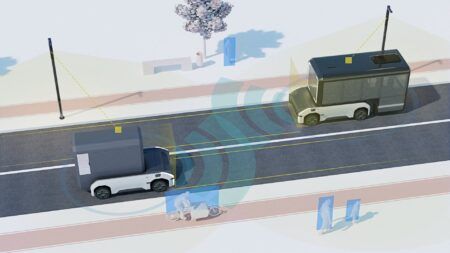

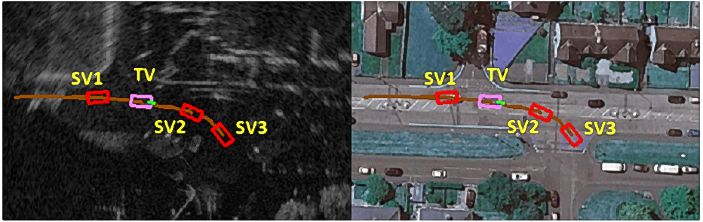

Sensors that operate in Scotland’s rain, snow and fog are providing data that could help autonomous vehicles see and operate safely in adverse weather. The Radiate project led by Heriot-Watt University has published a new dataset that includes three hours of radar images and 200,000 tagged road actors including other vehicles and pedestrians.

The dataset solves a problem that has been facing manufacturers and researchers of autonomous vehicles. Until now, almost all the available labelled data has been based on sunny, clear days. This meant there was no public data available to help develop autonomous vehicles that can operate safely in adverse weather conditions. Existing datasets have also relied primarily on data collected from optical sensors, which, much like human vision, do not work as well during bad weather.

Professor Andrew Wallace and Dr Sen Wang have been collecting the data since 2019, when they kitted out a van with light detection and ranging (LiDAR), radar and stereo cameras, and geopositioning devices. They drove the van around Edinburgh and the Scottish Highlands to capture urban and rural roads at all times of day and night, purposefully chasing bad weather.

“Datasets are essential to developing and benchmarking perception systems for autonomous vehicles,” says Wallace. “We’re many years from driverless cars being on the streets, but autonomous vehicles are already being used in controlled circumstances or piloting areas. We’ve shown that radar can help autonomous vehicles to navigate, map and interpret their environment in bad weather, when vision and LiDAR can fail.”

The team says that, by labelling all the objects their system spotted on the roads, they’ve provided another step forward for researchers and manufacturers.

“We labelled over 200,000 road objects in our dataset – bicycles, cars, pedestrians, traffic signs and other road actors,” explains Wang. “We could use this data to help autonomous vehicles predict the future and navigate safely. When a car pulls out in front of you, you try to predict what it will do – will it swerve, will it take off? That’s what autonomous vehicles will have to do, and now we have a database that can put them on that path, even in bad weather.”

The team is based at Heriot-Watt’s Institute of Sensors, Signals and Systems, which has already developed classical and deep learning approaches to interpreting sensory data. They say their ultimate goal is to improve perception capability.

“We need to improve the resolution of the radar, which is naturally fuzzy,” says Wallace. “If we can combine high-resolution optical images with the weather-penetrating capability of enhanced radar, that takes us closer to autonomous vehicles being able to see and map better, and ultimately navigate more safely.”