A new open dataset from researchers at Chalmers University of Technology, Sweden, sets a new standard for evaluating the algorithms of self-driving vehicles. It will also accelerate the development of autonomous transport systems on roads, water and in the air.

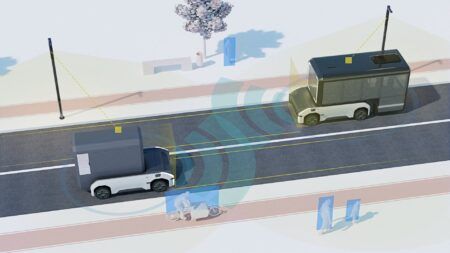

For self-driving vehicles to work, they need to interpret and understand their surroundings. To achieve this, they use cameras, sensors, radar and other equipment to ‘see’ their environment. This form of artificial perception enables them to adapt their speed and steering, much as human drivers react to changing conditions around them. In recent years, researchers and companies around the world have competed over which software algorithms provide the best artificial perception. To support this work, they use huge datasets that contain recorded sequences from traffic environments and other situations. These datasets are used to verify that the algorithms work as well as possible and that they interpret situations correctly.

Chalmers University of Technology, Sweden, has now launched a new open dataset called Reeds, in collaboration with the University of Gothenburg, Rise (Research Institutes of Sweden), and the Swedish Maritime Administration. The dataset is available to researchers and industry worldwide.

The dataset provides high-quality, accurate recordings of the test vehicle’s surroundings. To create the most challenging conditions possible – and thus increase the complexity required of the software algorithms – the researchers chose to use a boat, whose movements relative to its surroundings are more complex than those of vehicles on land. This makes Reeds the first marine dataset of this type.

“The goal is to set a standard for development and evaluation of tomorrow’s fully autonomous systems,” says Ola Benderius, associate professor at the Department of Mechanics and Maritime Sciences at Chalmers University of Technology, who is leading the project. “With Reeds, we are creating a dataset of the highest possible quality, that offers great social benefit and safer systems.”

Benderius hopes that the dataset will be a breakthrough, enabling more accurate verification and thereby raising the quality of artificial perception.

The dataset has been developed using an advanced research boat that travels predetermined routes around western Sweden, under different weather and light conditions. The trips will continue for another three years, so the dataset will grow over time. The boat is equipped with highly advanced cameras, laser scanners, radar, motion sensors and positioning systems, to create a comprehensive picture of the environment around the craft.

Technical standards and advanced AI

The camera system on the boat contains the latest in camera technology, generating six gigabytes of image data per second. At that rate, a 1.5-hour trip provides roughly 32 terabytes of image data. The system also provides ideal conditions for future verification of artificial perception.
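
As a rough check on the data volumes involved, the short sketch below multiplies the stated capture rate by the trip duration. It assumes decimal units (1 terabyte = 1,000 gigabytes) and continuous recording at the full rate for the whole trip, neither of which is confirmed by the project; the function name `data_volume_tb` is purely illustrative.

```python
def data_volume_tb(rate_gb_per_s: float, trip_hours: float) -> float:
    """Estimate recorded image data in terabytes.

    Assumes decimal units (1 TB = 1000 GB) and that the cameras record
    at the full rate for the entire trip; both are assumptions.
    """
    seconds = trip_hours * 3600          # hours -> seconds
    total_gb = rate_gb_per_s * seconds   # gigabytes recorded during the trip
    return total_gb / 1000               # gigabytes -> terabytes


# Figures from the article: six gigabytes per second, 1.5-hour trip.
print(f"{data_volume_tb(6, 1.5):.1f} TB")  # prints "32.4 TB"
```

If recording is intermittent or the average rate is lower than the peak rate, the real volume per trip would be correspondingly smaller.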

“Our system is of a very high technical standard,” says Benderius. “It allows for a more detailed verification and comparison between different software algorithms for artificial perception – a crucial foundation for AI.”

During the project, Reeds has been tested and further developed by other researchers at Chalmers, as well as specially invited international researchers. They have worked on automatic recognition and classification of other vessels, estimating the boat’s own movements from camera data, 3D modelling of the environment and AI-based removal of water droplets from camera lenses.

Reeds also provides the conditions for fair comparisons between different researchers’ software algorithms. Researchers upload their software to Reeds’ cloud service, where evaluation against the data and comparison with other groups’ software take place completely automatically. The results of the comparisons are published openly, so anyone can see which researchers around the world have developed the best methods of artificial perception in different areas. As the dataset grows, large amounts of raw data will gradually accumulate and be analysed continuously and automatically in the cloud service. Reeds’ cloud service thus provides the conditions for both collaboration and competition between research groups, so that over time artificial perception will increase in complexity for all types of self-driving systems.

The project began in 2020 and has been run by Chalmers University of Technology in collaboration with the University of Gothenburg, Rise and the Swedish Maritime Administration. The Swedish Transport Administration is funding the project.