Mobility researchers at the University of Michigan (U-M) have devised a new way to test autonomous vehicles (AVs) that bypasses the billions of miles they would need to log for consumers to consider them road-ready.

The process, which was developed using data from more than 25 million miles (40 million kilometers) of real-world driving, can significantly cut the time required to evaluate how self-driving vehicles handle potentially dangerous situations. According to the team, it could save 99.9% of testing time and costs. The researchers outline the approach in a new white paper published by Mcity, a U-M-led public-private partnership to accelerate advanced mobility vehicles and technologies.

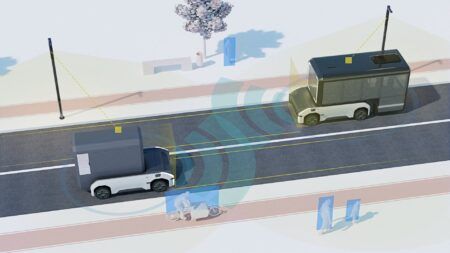

The new accelerated evaluation process breaks down difficult real-world driving situations into components that can be tested or simulated repeatedly, exposing AVs to a condensed set of the most challenging driving situations. In this way, just 1,000 miles of testing can yield the equivalent of 300,000 to 100 million miles of real-world driving. That compression matters because even 100 million miles of ordinary driving is not enough to certify the safety of an AV: the difficult scenarios testers need to zero in on are rare. A crash that results in a fatality occurs only once in every 100 million miles of driving. For consumers to gain enough confidence to accept driverless vehicles, researchers say test vehicles would need to be driven 11 billion miles in simulated or real-world settings.
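The figures in that paragraph can be checked with a little arithmetic. The sketch below is illustrative and not taken from the white paper: it computes the implied acceleration factor (real-world miles represented by each test mile) and, assuming fatal crashes arrive as a Poisson process at the quoted rate of one per 100 million miles, the standard zero-failure bound on how many crash-free miles a vehicle would need to log to show, with 95% confidence, that its fatality rate is no higher than that. The helper names, the Poisson model, and the 95% level are assumptions; the sketch does not reproduce the 11-billion-mile figure, which rests on the researchers' own, more demanding criteria.

```python
import math

# Figures quoted in the article
TEST_MILES = 1_000
EQUIVALENT_MILES_LOW = 300_000
EQUIVALENT_MILES_HIGH = 100_000_000
FATAL_CRASH_RATE = 1 / 100_000_000  # one fatal crash per 100 million miles

# Hypothetical helpers; the Poisson assumption below is ours, not the white paper's.


def acceleration_factor(equivalent_miles: float, test_miles: float = TEST_MILES) -> float:
    """Real-world miles that each accelerated test mile stands in for."""
    return equivalent_miles / test_miles


def zero_failure_miles(rate: float, confidence: float) -> float:
    """Crash-free miles needed to bound the event rate at or below `rate`
    with the given confidence, assuming events follow a Poisson process:
    P(0 events in n miles) = exp(-rate * n), which must be <= 1 - confidence."""
    return -math.log(1.0 - confidence) / rate


if __name__ == "__main__":
    print(acceleration_factor(EQUIVALENT_MILES_LOW))   # 300.0
    print(acceleration_factor(EQUIVALENT_MILES_HIGH))  # 100000.0
    print(f"{zero_failure_miles(FATAL_CRASH_RATE, 0.95):.3e}")  # 2.996e+08
```

Under these assumptions, each accelerated test mile stands in for 300 to 100,000 real-world miles, and merely matching the human fatality rate already demands roughly 300 million crash-free miles of conventional testing.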

The researchers analyzed data from 25.2 million miles of real-world driving collected by two U-M Transportation Research Institute projects: Safety Pilot Model Deployment and Integrated Vehicle-Based Safety Systems, which involved nearly 3,000 vehicles and volunteers over two years. From that data, the team:

- Identified events that could contain ‘meaningful interactions’ between an AV and a human-driven vehicle, and created a simulation that replaced all the uneventful miles with these meaningful interactions;
- Programmed the simulation to treat human drivers as the major threat to AVs and placed them randomly throughout;
- Conducted mathematical tests to assess the risk and probability of certain outcomes, including crashes, injuries, and near-misses;
- Interpreted the accelerated test results using ‘importance sampling’ to learn how the AV would perform, statistically, in everyday driving situations.
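The article does not describe the researchers' statistical model in detail, so the following is only a minimal sketch of the general importance-sampling idea, not the U-M method. It assumes a hypothetical one-dimensional "severity" score for a human driver's maneuver that is standard normal, with a crash whenever the score exceeds 4. Plain Monte Carlo sampling almost never sees such an event; importance sampling instead draws from a proposal distribution shifted toward the dangerous region (loosely analogous to replacing uneventful miles with meaningful interactions) and reweights each draw by the likelihood ratio so the estimate of the everyday crash probability stays unbiased. All function names, the threshold, and the shifted-normal proposal are illustrative assumptions.

```python
import math
import random


def normal_pdf(x: float, mean: float = 0.0) -> float:
    """Density of a unit-variance normal distribution centred at `mean`."""
    return math.exp(-0.5 * (x - mean) ** 2) / math.sqrt(2.0 * math.pi)


def crude_monte_carlo(threshold: float, n: int, rng: random.Random) -> float:
    """Estimate P(X > threshold), X ~ N(0, 1), by plain sampling of everyday driving."""
    hits = sum(1 for _ in range(n) if rng.gauss(0.0, 1.0) > threshold)
    return hits / n


def importance_sampling(threshold: float, n: int, rng: random.Random) -> float:
    """Estimate the same probability by sampling from the proposal N(threshold, 1),
    where dangerous draws are common, and weighting each crash by p(x) / q(x)."""
    total = 0.0
    for _ in range(n):
        x = rng.gauss(threshold, 1.0)  # draw from the biased proposal q
        if x > threshold:              # hypothetical "crash" event
            total += normal_pdf(x) / normal_pdf(x, mean=threshold)
    return total / n


def exact_tail(threshold: float) -> float:
    """Closed-form P(X > threshold) for a standard normal, for comparison."""
    return 0.5 * math.erfc(threshold / math.sqrt(2.0))


if __name__ == "__main__":
    rng = random.Random(0)
    threshold, n = 4.0, 10_000
    print("exact     ", exact_tail(threshold))
    print("crude MC  ", crude_monte_carlo(threshold, n, rng))
    print("importance", importance_sampling(threshold, n, rng))
```

With 10,000 draws, plain sampling often returns zero, because the event occurs only about 3 times in 100,000 draws, whereas the importance-sampling estimate lands within a few percent of the exact value. The design choice is the proposal distribution: shifting it onto the rare region concentrates effort where crashes happen, and the likelihood-ratio weights translate the results back to everyday driving conditions.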

“Test methods for traditionally driven cars are something like having a doctor take a patient’s blood pressure or heart rate, while testing for automated vehicles is more like giving someone an IQ test,” said Ding Zhao, U-M research scientist and co-author of the white paper.