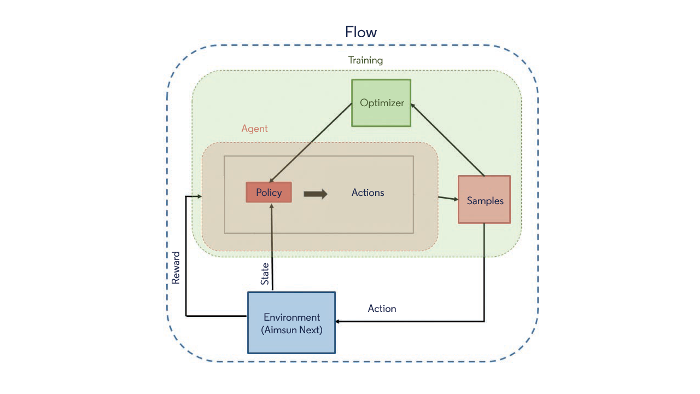

A new partnership between transport software developer Aimsun and the University of California at Berkeley’s Institute of Transportation Studies has resulted in the release of Flow, a tool for managing large-scale traffic systems with a mix of human-driven and autonomous vehicles (AVs).

Flow is the first open source architecture to integrate microsimulation tools with state-of-the-art deep reinforcement learning libraries in the cloud, in this case Amazon Web Services Elastic Compute Cloud (AWS EC2). In traffic that mixes human-driven and automated vehicles, learning to make good decisions about, for example, AV control or traffic signal control is a major challenge, especially when complex human behaviors and the potential impact of self-driving vehicles on traffic must be predicted. However, recent developments in multi-agent deep reinforcement learning algorithms, advances in the Aimsun Next microscopic traffic simulation libraries, and easier access to cloud computing services such as AWS together provide a powerful ecosystem for finding near-optimal decision rules, such as AV acceleration, AV lane changing, or traffic signal splits, in mixed-autonomy systems.
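To make the idea of "finding near-optimal decision rules" in simulation concrete, here is a minimal, self-contained sketch of simulation-in-the-loop policy search. It does not use Flow, Aimsun Next, RLlib or AWS: a toy single-lane ring road with Intelligent Driver Model (IDM) human drivers stands in for the microsimulator, and a plain grid search over one AV controller parameter stands in for deep reinforcement learning. All names and values (`RingEnv`, `av_policy`, `search`, the ring length, the IDM constants) are illustrative assumptions.

```python
import math
import random

# Hypothetical toy parameters; none of these come from Flow or Aimsun Next.
RING_LENGTH = 230.0  # ring circumference (m)
N_VEHICLES = 22      # vehicle 0 is the AV, vehicles 1..21 are "human" drivers
VEH_LENGTH = 5.0     # vehicle length (m)
DT = 0.1             # simulation time step (s)


def idm_accel(v, gap, dv, v0=30.0, T=1.0, a=1.0, b=1.5, s0=2.0):
    """Intelligent Driver Model; dv is own speed minus leader speed."""
    s_star = s0 + max(0.0, v * T + v * dv / (2.0 * math.sqrt(a * b)))
    return a * (1.0 - (v / v0) ** 4 - (s_star / max(gap, 0.1)) ** 2)


class RingEnv:
    """Minimal single-lane ring road with a reset/step interface."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.x, self.v = [], []

    def reset(self):
        spacing = RING_LENGTH / N_VEHICLES
        self.x = [i * spacing for i in range(N_VEHICLES)]
        self.v = [self.rng.uniform(0.0, 1.0) for _ in range(N_VEHICLES)]
        return self._obs()

    def _gap(self, i):
        leader = (i + 1) % N_VEHICLES
        return (self.x[leader] - self.x[i]) % RING_LENGTH - VEH_LENGTH

    def _obs(self):
        # AV observation: own speed, leader speed minus own speed, gap to leader.
        return (self.v[0], self.v[1] - self.v[0], self._gap(0))

    def step(self, av_accel):
        accels = []
        for i in range(N_VEHICLES):
            leader = (i + 1) % N_VEHICLES
            idm = idm_accel(self.v[i], self._gap(i), self.v[i] - self.v[leader])
            # The AV may brake harder than IDM but never accelerates harder.
            accels.append(min(av_accel, idm) if i == 0 else idm)
        # Synchronous update: all accelerations are computed before anyone moves.
        for i in range(N_VEHICLES):
            self.v[i] = max(0.0, self.v[i] + accels[i] * DT)
            self.x[i] = (self.x[i] + self.v[i] * DT) % RING_LENGTH
        reward = sum(self.v) / N_VEHICLES  # mean speed of all vehicles (m/s)
        return self._obs(), reward


def av_policy(obs, target_speed, gain=0.5):
    """One-parameter AV controller: steer own speed toward target_speed."""
    own_speed, _, _ = obs
    return gain * (target_speed - own_speed)


def evaluate(target_speed, steps=3000, seed=0):
    """Average reward of one simulated rollout for a given parameter."""
    env = RingEnv(seed)
    obs = env.reset()
    total = 0.0
    for _ in range(steps):
        obs, reward = env.step(av_policy(obs, target_speed))
        total += reward
    return total / steps


def search(candidates):
    """Derivative-free search: keep the parameter with the best average reward."""
    scores = {u: evaluate(u) for u in candidates}
    best = max(scores, key=scores.get)
    return best, scores


best, scores = search([2.0, 4.0, 6.0, 8.0])
print(f"best AV target speed: {best} m/s (mean speed {scores[best]:.2f} m/s)")
```

In a real setup the grid search would give way to a deep reinforcement learning algorithm and the toy ring to a full microsimulator; the sketch only shows the shape of the loop: simulate, score the outcome, and update the decision rule.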

Originally launched in September 2018, with benchmarks released in November at the Conference on Robot Learning (CoRL), Flow now also integrates the Aimsun Next traffic modeling software. With this release, Aimsun Next users gain a new environment with access to state-of-the-art deep reinforcement learning libraries in the cloud, without having to build their own machine learning infrastructure. Aimsun, a Siemens company since last year, will present Flow at the Transportation Research Board Annual Meeting in Washington, DC this week, alongside representatives from the UC Berkeley team.

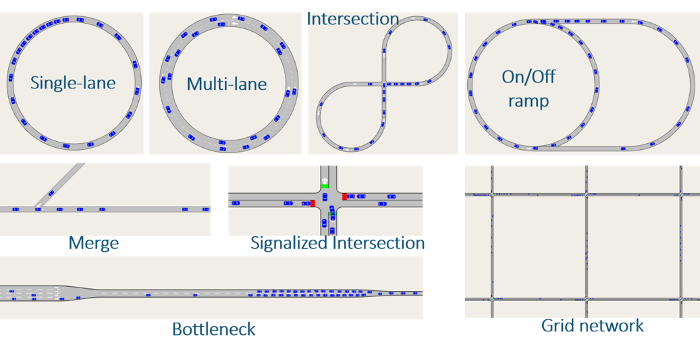

Flow is a traffic control framework that provides a suite of prebuilt traffic control scenarios, tools for designing custom traffic scenarios, and integration with both deep reinforcement learning libraries, such as RLlib, and traffic microsimulation libraries. Engineers can use Flow to apply breakthroughs in deep reinforcement learning to a range of traffic management problems. These involve both classic infrastructure, such as traffic lights and metering, and mobile infrastructure, such as mixed-autonomy traffic, in particular the use of connected and automated vehicles (CAVs) to regulate traffic.

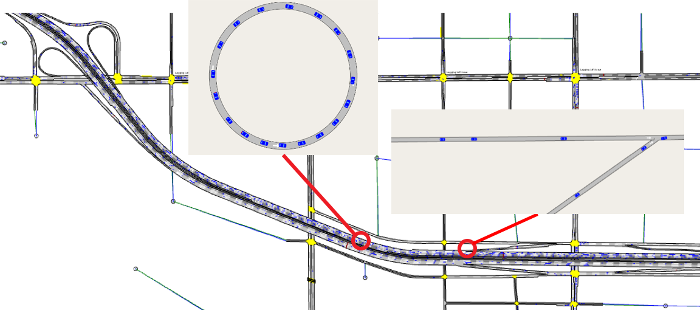

Flow supports exploring many case studies through a building-block approach, using modular benchmark cases that can be assembled. Users can build modular traffic scenarios and combine them to tackle complex situations. These building blocks help break a problem down into smaller, tractable pieces that can be composed into controllers for new scenarios. For instance, single-lane/multilane and merge building blocks can be used to study stop-and-go and merging traffic behaviors along a highway.
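A minimal sketch of the building-block idea follows. It assumes nothing about Flow's actual scenario classes: `Block`, `ComposedScenario` and `track_speed` are hypothetical names, and each block carries its own simple speed-tracking controller, so the composed highway hands control to whichever block contains the vehicle's current position.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical controller type: maps an AV's speed (m/s) to an acceleration (m/s^2).
Controller = Callable[[float], float]


@dataclass(frozen=True)
class Block:
    """One reusable road piece with its own local controller."""
    name: str
    length_m: float
    lanes: int
    controller: Controller


class ComposedScenario:
    """Chains blocks end to end and dispatches to the controller of the current block."""

    def __init__(self, blocks: List[Block]):
        if not blocks:
            raise ValueError("a scenario needs at least one block")
        self.blocks = list(blocks)
        self.starts: List[float] = []  # start position of each block along the route
        position = 0.0
        for block in self.blocks:
            self.starts.append(position)
            position += block.length_m
        self.total_length_m = position

    def block_at(self, position_m: float) -> Block:
        if not 0.0 <= position_m < self.total_length_m:
            raise ValueError(f"position {position_m} m is outside the scenario")
        return self.blocks[bisect_right(self.starts, position_m) - 1]

    def accel(self, position_m: float, speed: float) -> float:
        return self.block_at(position_m).controller(speed)


def track_speed(target: float, gain: float = 0.5) -> Controller:
    """Simple proportional speed-tracking controller (illustrative only)."""
    return lambda speed: gain * (target - speed)


# A highway assembled from single-lane, merge and multilane blocks.
highway = ComposedScenario([
    Block("single_lane", 500.0, 1, track_speed(20.0)),
    Block("merge", 200.0, 2, track_speed(12.0)),
    Block("multilane", 800.0, 3, track_speed(25.0)),
])
print(highway.total_length_m, highway.block_at(600.0).name, highway.accel(600.0, 10.0))
# prints: 1500.0 merge 1.0
```

Keeping each controller local to its block is one way to let a controller tuned on a single piece, such as the merge, be reused unchanged when that piece is dropped into a larger network.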

In mixed-autonomy traffic control, reinforcement learning (RL) methods proposed in the literature are often difficult to evaluate, largely due to the lack of a standardized testbed. Systematic evaluation and comparison will not only further our understanding of the strengths of existing algorithms but also reveal their limitations and suggest directions for future research. Flow provides benchmarks for using deep reinforcement learning to create controllers for mixed-autonomy traffic, where CAVs interact with human drivers and infrastructure.
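The point of a standardized testbed is that every method is scored under identical conditions. The following minimal benchmark harness is sketched around a toy car-following task rather than any actual Flow benchmark. It runs each controller on the same fixed random seeds and reports the mean and standard deviation of episode returns; `ToyFollowEnv`, `run_benchmark` and both controllers are hypothetical.

```python
import random
import statistics
from typing import Callable, Dict, Iterable, Tuple

# Hypothetical controller signature: (own speed, leader speed) -> acceleration.
Controller = Callable[[float, float], float]


class ToyFollowEnv:
    """Toy task (an assumption, not a Flow benchmark): track a randomly varying leader."""

    def __init__(self, seed: int):
        self.rng = random.Random(seed)
        self.leader_speed = 15.0
        self.speed = 10.0

    def observe(self) -> Tuple[float, float]:
        return self.speed, self.leader_speed

    def step(self, accel: float, dt: float = 0.1) -> float:
        # The leader's speed follows a seeded random walk, independent of the controller.
        self.leader_speed = max(0.0, self.leader_speed + self.rng.gauss(0.0, 0.5))
        self.speed = max(0.0, self.speed + accel * dt)
        return -(self.speed - self.leader_speed) ** 2  # penalise speed mismatch


def run_benchmark(controllers: Dict[str, Controller], seeds: Iterable[int],
                  horizon: int = 200) -> Dict[str, Tuple[float, float]]:
    """Score every controller on the same seeds; return (mean, stdev) of episode returns."""
    seeds = list(seeds)
    results = {}
    for name, controller in controllers.items():
        returns = []
        for seed in seeds:
            env = ToyFollowEnv(seed)
            total = 0.0
            for _ in range(horizon):
                total += env.step(controller(*env.observe()))
            returns.append(total)
        results[name] = (statistics.mean(returns), statistics.stdev(returns))
    return results


controllers = {
    "do_nothing": lambda speed, leader: 0.0,
    "match_speed": lambda speed, leader: leader - speed,
}
for name, (mean, std) in run_benchmark(controllers, seeds=range(5)).items():
    print(f"{name:12s} return {mean:10.1f} +/- {std:.1f}")
```

Because the seeds fix the leader's behavior, differences between rows come from the controllers alone, which is the property a shared benchmark is meant to provide.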