At the world’s most important symposium for artificial intelligence (AI), in Long Beach, California, Audi has been exhibiting an innovative pre-development project that uses a mono camera and neural networks to generate a highly precise 3D model of a vehicle’s environment.

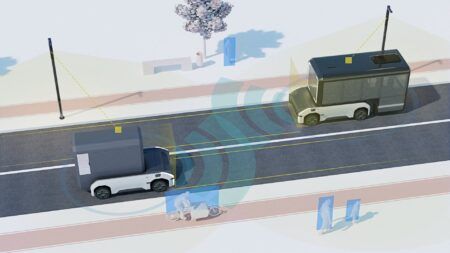

A project team from Audi Electronics Venture (AEV), an Audi subsidiary, has been presenting the camera system at the Conference and Workshop on Neural Information Processing Systems (NIPS). The system uses AI to generate a highly precise 3D model of the environment, making it possible to capture the exact surroundings of an autonomous car. The sensor is a conventional mono front camera that covers the area in front of the car within an angle of about 120° and delivers 15 images per second at a resolution of 1.3 megapixels. These images are then processed by a neural network that performs semantic segmentation, classifying each pixel into one of 13 object classes. This enables the system to identify and distinguish between objects such as other cars, trucks, houses, road markings, people, and traffic signs.
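The per-pixel classification step can be illustrated with a minimal Python sketch. It assumes that a segmentation network (not shown, and not Audi’s) has already produced a score for each of the 13 classes at every pixel; the function names `segment` and `pixels_per_second` are hypothetical. Each pixel simply takes the class with the highest score, and the helper shows the resulting workload: at 1.3 megapixels and 15 images per second, roughly 19.5 million pixels have to be classified every second.

```python
import numpy as np

NUM_CLASSES = 13  # number of object classes cited in the article


def segment(logits: np.ndarray) -> np.ndarray:
    """Return an (H, W) map of class indices from (NUM_CLASSES, H, W) scores.

    The scores are assumed to come from some segmentation network; this
    sketch only performs the final per-pixel decision.
    """
    if logits.ndim != 3 or logits.shape[0] != NUM_CLASSES:
        raise ValueError(f"expected shape ({NUM_CLASSES}, H, W), got {logits.shape}")
    # Each pixel is assigned the index of the class with the highest score.
    return np.argmax(logits, axis=0)


def pixels_per_second(megapixels: float, fps: float) -> float:
    """Number of pixels that must be classified per second."""
    return megapixels * 1e6 * fps


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Random scores for a tiny 4x6 "image" stand in for real network output.
    scores = rng.normal(size=(NUM_CLASSES, 4, 6))
    print(segment(scores))
    print(f"{pixels_per_second(1.3, 15):,.0f} pixels/s")
```

In a real system the expensive part is producing the scores; the final argmax is cheap, which is why the throughput figure matters mainly for the network itself.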

The system also uses neural networks to estimate distance. The distances are visualized as isolines: virtual boundaries that each mark a constant distance. Combining semantic segmentation with depth estimation produces a precise 3D model of the actual environment.

Audi engineers had previously trained the neural network using unsupervised learning. Unlike supervised learning, this method learns from observations of circumstances and scenarios and does not require pre-sorted, classified data. The network was fed numerous videos of road situations recorded with a stereo camera, and from them it learned on its own the rules it uses to derive 3D information from the mono camera’s images.
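How a depth map and a class map combine into a labeled 3D model can be sketched in the same way. This is a minimal illustration under assumptions the article does not state: it uses a standard pinhole camera model with hypothetical calibration values (`fx`, `fy`, `cx`, `cy`) to turn per-pixel depth and class labels into labeled 3D points, marks isoline pixels where the estimated depth crosses a chosen distance, and includes the textbook stereo relation Z = f·B/d, which links disparity in a rectified stereo pair to depth. That relation explains why stereo recordings contain the distance information a network can learn from; it is not a description of Audi’s training method, and all function names are hypothetical.

```python
import numpy as np


def back_project(depth, labels, fx, fy, cx, cy):
    """Turn an (H, W) depth map and class map into labeled 3D points.

    Uses a pinhole camera model; fx, fy (focal lengths in pixels) and
    cx, cy (principal point) are hypothetical calibration values.
    Returns an (H*W, 3) array of x, y, z (same unit as depth, e.g. metres)
    and an (H*W,) array of class labels.
    """
    h, w = depth.shape
    v, u = np.indices((h, w))  # v: row index, u: column index
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    points = np.stack([x, y, depth], axis=-1).reshape(-1, 3)
    return points, labels.reshape(-1)


def isoline_mask(depth, distance):
    """Mark pixels whose depth lies on the other side of `distance` than a neighbour's."""
    beyond = depth >= distance
    mask = np.zeros_like(beyond)
    # Horizontal neighbours on opposite sides of the threshold.
    mask[:, :-1] |= beyond[:, :-1] != beyond[:, 1:]
    # Vertical neighbours on opposite sides of the threshold.
    mask[:-1, :] |= beyond[:-1, :] != beyond[1:, :]
    return mask


def stereo_depth(disparity_px, focal_px, baseline_m):
    """Depth from a rectified stereo pair: Z = f * B / d."""
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    depth = np.array([[5.0, 8.0, 12.0, 20.0],
                      [5.0, 9.0, 11.0, 20.0]])
    labels = np.array([[0, 0, 1, 1],
                       [0, 0, 1, 1]])
    points, point_labels = back_project(depth, labels, fx=2.0, fy=2.0, cx=1.5, cy=0.5)
    print(points[point_labels == 1])  # 3D points belonging to class 1
    print(isoline_mask(depth, 10.0).astype(int))  # isoline at 10 units
    print(stereo_depth(np.array([50.0]), focal_px=1000.0, baseline_m=0.5))
```

Drawing several such masks at fixed distances (for example every 10 m) would give the kind of isoline overlay the article describes; the spacing here is an arbitrary choice.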

The system was demonstrated on the new Audi A8, the first car in the world developed for conditional automated driving at SAE Level 3. Its AI traffic jam pilot takes over the task of driving in slow-moving traffic at speeds of up to 37 mph (60 km/h).

At NIPS, another Audi team, from the company’s Electronics Research Laboratory in Belmont, California, demonstrated a solution for purely AI-based parking and driving in parking lots and on highways. Here, the car’s lateral guidance (steering) is carried out entirely by neural networks: the AI learns on its own to generate a model of the environment from camera data and to steer the car. This approach requires neither high-precision localization nor map data.