Amazon’s Rekognition service and Swedish startup Mapillary have teamed up to combine their technologies, helping US cities manage their parking signage and helping residents find parking more easily in congested urban areas.

Managing parking assets and infrastructure is a billion-dollar problem for cities across the USA. There has been no easy way for cities and Departments of Transportation (DOTs) to access parking sign data, resulting in poor decisions around parking infrastructure and planning.

City authorities have typically gone out on foot to capture images of parking signs before analyzing them manually. This is not a scalable or cost-effective way of managing parking infrastructure, which is why comprehensive parking data is unavailable in many areas of the world.

As an example, Mapillary cites Washington, DC, which has an inventory of more than 206,700 signs and 123,087 sign posts. The District Department of Transportation and the Department of Public Works receive one to two reports of conflicting parking signs every day, and each review takes city officials four months to address.

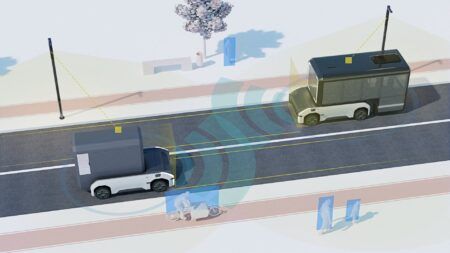

To avoid this kind of confusion, Mapillary and Amazon Rekognition are introducing a scalable way for US cities to audit and manage their parking infrastructure. Amazon Rekognition, a deep learning-based image and video analysis service, will extract parking sign data from the 360 million crowdsourced street-level images contributed to the Mapillary platform, which is used to create better maps without being tied to any particular mapping platform.

Mapillary’s traffic sign recognition algorithm detects 1,500 classes of traffic signs from around the world with a recognition rate of 98%. By combining it with Amazon Rekognition’s Text-in-Image feature, the company can now extract the text from parking signs detected across the US, adding important detail to the data.

When contributors upload images to the Mapillary platform, the company’s award-winning semantic segmentation model automatically finds parking signs within them. Once a sign is detected, Amazon Rekognition’s Text-in-Image feature is applied to extract the text on it. The result is an automated, computer vision-powered approach to managing parking sign data, giving instant access to information that previously took months or years to collect and assimilate.
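Mapillary has not published how its integration works, so the following is only a minimal sketch of the two-step idea described above: a sign detector supplies a bounding box, and Rekognition’s text detection (the `DetectText` operation behind Text-in-Image) supplies the text. It assumes the detector outputs a normalized box in the same `Left`/`Top`/`Width`/`Height` form that Rekognition uses. The helper names (`sign_text_lines`, `read_sign`), the 90% confidence threshold and the "keep a line if its centre falls inside the sign" rule are our own illustrative choices, not part of either company’s system. The response fields (`TextDetections`, `Type`, `Confidence`, `Geometry.BoundingBox`, `DetectedText`) follow Rekognition’s documented response shape.

```python
from typing import Any, Dict, List, Tuple

# Rekognition-style box: Left, Top, Width, Height as fractions of the image size.
Box = Dict[str, float]


def box_center(box: Box) -> Tuple[float, float]:
    """Return the (x, y) centre of a normalized bounding box."""
    return (box["Left"] + box["Width"] / 2, box["Top"] + box["Height"] / 2)


def box_contains(outer: Box, point: Tuple[float, float]) -> bool:
    """True if the point lies inside (or on the edge of) the outer box."""
    x, y = point
    return (outer["Left"] <= x <= outer["Left"] + outer["Width"]
            and outer["Top"] <= y <= outer["Top"] + outer["Height"])


def sign_text_lines(response: Dict[str, Any], sign_box: Box,
                    min_confidence: float = 90.0) -> List[str]:
    """Hypothetical helper: keep confident LINE detections whose centre falls
    inside the detected sign, ordered top to bottom like the sign itself."""
    kept = []
    for det in response.get("TextDetections", []):
        if det.get("Type") != "LINE":  # WORD entries repeat the LINE text
            continue
        if det.get("Confidence", 0.0) < min_confidence:
            continue
        box = det["Geometry"]["BoundingBox"]
        if box_contains(sign_box, box_center(box)):
            kept.append((box["Top"], det["DetectedText"]))
    return [text for _, text in sorted(kept)]


def read_sign(client: Any, image_bytes: bytes, sign_box: Box,
              min_confidence: float = 90.0) -> List[str]:
    """Hypothetical helper: `client` is assumed to be a Rekognition client,
    e.g. boto3.client("rekognition"); not Mapillary's actual code."""
    response = client.detect_text(Image={"Bytes": image_bytes})
    return sign_text_lines(response, sign_box, min_confidence)


# Usage with a hand-written, DetectText-shaped response (invented sample data).
sample_response = {"TextDetections": [
    {"DetectedText": "8AM - 6PM", "Type": "LINE", "Confidence": 99.0,
     "Geometry": {"BoundingBox": {"Left": 0.42, "Top": 0.28, "Width": 0.10, "Height": 0.03}}},
    {"DetectedText": "2 HOUR PARKING", "Type": "LINE", "Confidence": 99.5,
     "Geometry": {"BoundingBox": {"Left": 0.42, "Top": 0.22, "Width": 0.10, "Height": 0.03}}},
    {"DetectedText": "PARKING", "Type": "WORD", "Confidence": 99.5,
     "Geometry": {"BoundingBox": {"Left": 0.46, "Top": 0.22, "Width": 0.05, "Height": 0.03}}},
    {"DetectedText": "EXCEPT SUNDAY", "Type": "LINE", "Confidence": 55.0,
     "Geometry": {"BoundingBox": {"Left": 0.42, "Top": 0.34, "Width": 0.10, "Height": 0.03}}},
    {"DetectedText": "PIZZA", "Type": "LINE", "Confidence": 97.0,
     "Geometry": {"BoundingBox": {"Left": 0.05, "Top": 0.60, "Width": 0.08, "Height": 0.04}}},
]}
sign = {"Left": 0.40, "Top": 0.20, "Width": 0.15, "Height": 0.20}
print(sign_text_lines(sample_response, sign))  # -> ['2 HOUR PARKING', '8AM - 6PM']
```

Filtering on the client keeps the sketch testable without AWS credentials. Rekognition’s `DetectText` also accepts an optional `Filters` argument with regions of interest, which could push the same cropping to the service side.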

Earlier this year, the company launched Mapillary for Organizations to give GIS (geographic information system) and city planning teams the ability to subscribe to map data in an automated way. Through the partnership with Amazon Rekognition, parking sign data will now be included in that offering.

The company says it will also apply text recognition to more of the objects detected in images contributed to the Mapillary platform, enriching the overall dataset. Participating city authorities will also be invited to contribute their own imagery to the platform, gathered from smartphones, action cameras or dash-cams, to gain additional insight into their asset inventories.